Load local or remote MCAP files containing custom data (e.g. Protobuf, JSON, FlatBuffers), or connect directly to live custom data via a Foxglove WebSocket connection.

Live data

Connect directly to your live custom data (e.g. Protobuf, JSON, FlatBuffers) with an encoding-agnostic WebSocket connection.

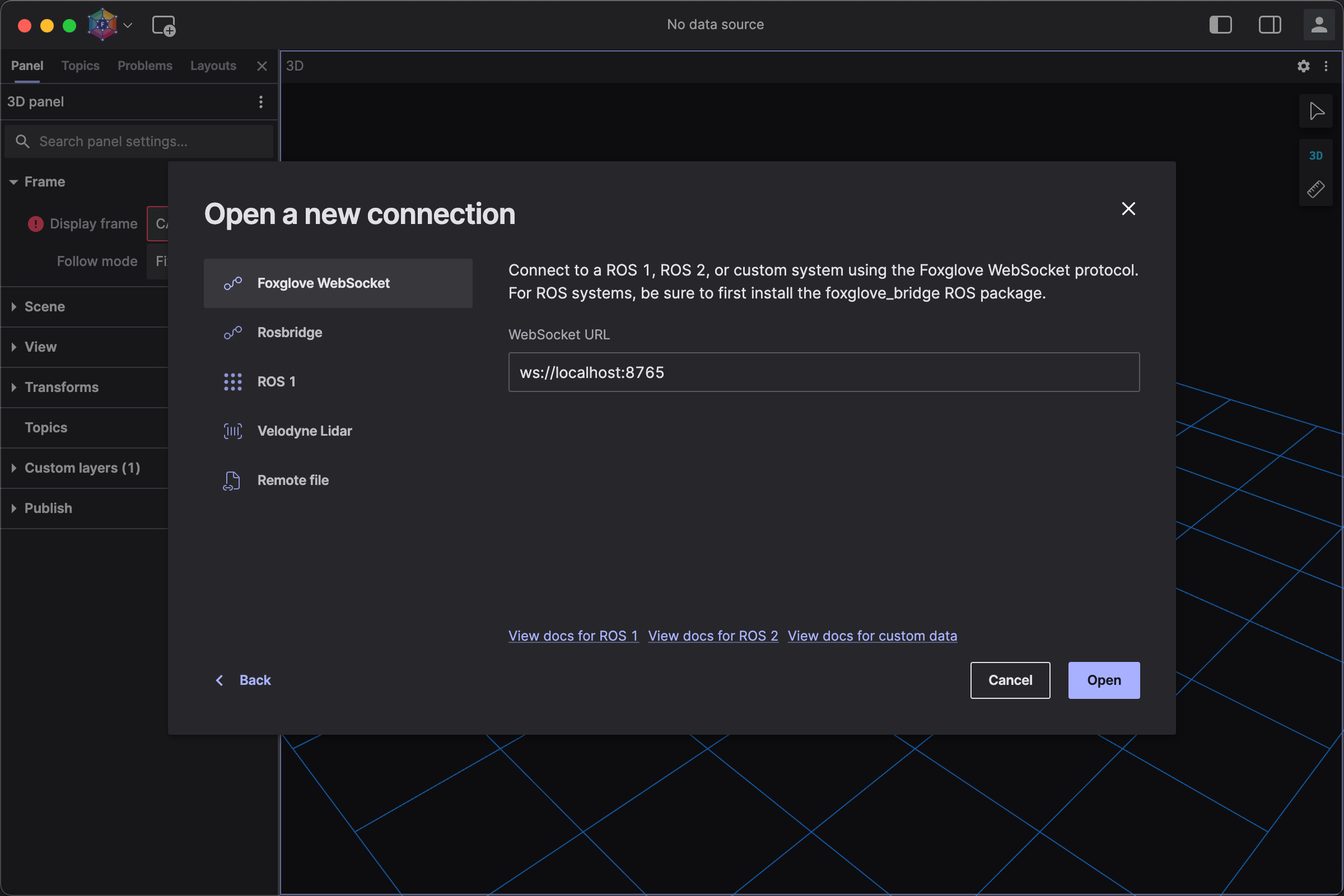

In Foxglove, select "Open connection" in the "Open data source" dialog.

Foxglove WebSocket

Create your own server to start publishing your custom data.

The foxglove/ws-protocol repo provides:

- Packages for building custom servers and clients (C++, Python, JavaScript/TypeScript)

- Templates for custom servers (C++, Python, JavaScript/TypeScript) and clients (JavaScript/TypeScript)

The @foxglove/ws-protocol-examples npm package also provides example servers and clients that you can run to see how Foxglove can receive system stats and image data from a custom WebSocket server:

$ npx @foxglove/ws-protocol-examples sysmon

$ npx @foxglove/ws-protocol-examples image-server

Connect

Select "Foxglove WebSocket" in the "Open data source" dialog, and enter the URL to your WebSocket server.

Remote file

Once you have an MCAP file in remote storage, follow the MCAP directions for opening it via URL in Foxglove.

Local data

Load custom data into Foxglove by first encoding it in MCAP (.mcap) files – see supported data formats here.

Imported data

Once you have converted your custom data to MCAP files and uploaded them to Foxglove, you can stream them directly for visualization.

Schema encodings

Both MCAP-based and Foxglove WebSocket sources support several message and schema encodings.
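The pairings described in the subsections below can be collected into a small lookup table, which may be handy when validating a channel before publishing. This is only a convenience sketch built from the values listed in this document; the names `ENCODING_PAIRS` and `is_supported_pair` are hypothetical and not part of any Foxglove API.

```python
# (schema encoding, message encoding) pairs, as listed in this document.
# ENCODING_PAIRS and is_supported_pair are illustrative names only.
ENCODING_PAIRS = {
    "JSON": [("jsonschema", "json")],
    "Protobuf": [("protobuf", "protobuf")],
    "FlatBuffers": [("flatbuffer", "flatbuffer")],
    "ROS 1": [("ros1msg", "ros1")],
    "ROS 2": [("ros2msg", "cdr"), ("ros2idl", "cdr")],
    "OMG IDL": [("omgidl", "cdr")],
}


def is_supported_pair(schema_encoding: str, message_encoding: str) -> bool:
    """Return True if the pair appears in the table above."""
    pair = (schema_encoding, message_encoding)
    return any(pair in pairs for pairs in ENCODING_PAIRS.values())
```

For example, `is_supported_pair("ros2idl", "cdr")` is true, while `is_supported_pair("jsonschema", "cdr")` is not.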

JSON

For JSON data, use schema encoding "jsonschema" and message encoding "json".

Schemas are required for Foxglove WebSocket, and must be a JSON Schema definition with "type": "object".

Each message should be UTF-8-encoded JSON representing an object. Binary data should be represented as a base64-encoded string in the JSON object, and its schema property should declare "contentEncoding": "base64" (e.g. { "type": "string", "contentEncoding": "base64" }).

NOTE: Foxglove does not support the use of JSON references ($ref).
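The rules above can be sketched with the Python standard library. The schema, its field names (`label`, `payload`), and the helper `encode_message` are made up for illustration; the parts taken from this section are the top-level "type": "object", the base64 content encoding for binary data, and the absence of `$ref`.

```python
import base64
import json

# Hypothetical schema for a message carrying a label and a binary blob.
# Any nested objects would be written inline, since $ref is not supported.
SCHEMA = {
    "type": "object",
    "properties": {
        "label": {"type": "string"},
        "payload": {"type": "string", "contentEncoding": "base64"},
    },
}


def encode_message(label: str, payload: bytes) -> bytes:
    """Return a UTF-8-encoded JSON object with the binary field base64-encoded."""
    obj = {
        "label": label,
        "payload": base64.b64encode(payload).decode("ascii"),
    }
    return json.dumps(obj).encode("utf-8")


schema_text = json.dumps(SCHEMA)  # schema data for the "jsonschema" encoding
message = encode_message("frame-0", b"\x00\x01\x02")  # one "json" message
```

Here `schema_text` is what would be supplied as the channel's schema, and `message` is the payload of a single message.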

Protobuf

For Protobuf data, use schema encoding "protobuf" and message encoding "protobuf".

Foxglove expects the schema data to be a binary FileDescriptorSet. For Foxglove WebSocket connections, the schema must additionally be base64-encoded because it is represented as a string.

You can generate the FileDescriptorSet offline using:

$ protoc --include_imports --descriptor_set_out=MySchema.bin --proto_path=<path to proto directory> MySchema.proto

Or generate it at runtime using Python:

    from typing import Any, Set

    from google.protobuf.descriptor import FileDescriptor
    from google.protobuf.descriptor_pb2 import FileDescriptorSet


    def build_file_descriptor_set(message_class: Any) -> FileDescriptorSet:
        """Collect the message's .proto file and all of its transitive dependencies."""
        file_descriptor_set = FileDescriptorSet()
        seen_dependencies: Set[str] = set()

        def append_file_descriptor(file_descriptor: FileDescriptor):
            # Add dependencies first, skipping any file that was already visited.
            for dep in file_descriptor.dependencies:
                if dep.name not in seen_dependencies:
                    seen_dependencies.add(dep.name)
                    append_file_descriptor(dep)
            file_descriptor.CopyToProto(file_descriptor_set.file.add())

        append_file_descriptor(message_class.DESCRIPTOR.file)
        return file_descriptor_set

Or C++:

    #include <google/protobuf/descriptor.h>
    #include <google/protobuf/descriptor.pb.h>

    #include <queue>
    #include <string>
    #include <unordered_set>

    // Writes the FileDescriptor of this descriptor and all transitive dependencies
    // to a string, for use as a channel schema.
    std::string SerializeFdSet(const google::protobuf::Descriptor* toplevelDescriptor) {
      google::protobuf::FileDescriptorSet fdSet;
      std::queue<const google::protobuf::FileDescriptor*> toAdd;
      std::unordered_set<std::string> added;
      toAdd.push(toplevelDescriptor->file());
      // Mark files as seen when they are queued, so that a file reachable through
      // several dependency paths is only written once.
      added.insert(std::string(toplevelDescriptor->file()->name()));
      while (!toAdd.empty()) {
        const google::protobuf::FileDescriptor* next = toAdd.front();
        toAdd.pop();
        next->CopyTo(fdSet.add_file());
        for (int i = 0; i < next->dependency_count(); ++i) {
          const auto& dep = next->dependency(i);
          if (added.insert(std::string(dep->name())).second) {
            toAdd.push(dep);
          }
        }
      }
      return fdSet.SerializeAsString();
    }

Foxglove also expects schemaName to be one of the message types defined in the FileDescriptorSet.

Each message should be encoded in the Protobuf binary wire format – we provide .proto files compatible with Foxglove in our foxglove/schemas repo.
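For a Foxglove WebSocket channel, the serialized FileDescriptorSet bytes have to be turned into a base64 string before they are used as the channel's schema. A minimal sketch using only the Python standard library follows. The helper name `schema_to_base64`, the placeholder bytes, the topic, and the message type name are assumptions for illustration. The channel keys follow our reading of the ws-protocol advertise message and should be checked against the foxglove/ws-protocol specification.

```python
import base64


def schema_to_base64(schema_bytes: bytes) -> str:
    """Encode binary schema data as an ASCII base64 string."""
    return base64.b64encode(schema_bytes).decode("ascii")


# Placeholder bytes standing in for a serialized FileDescriptorSet, e.g. the
# contents of MySchema.bin or the result of SerializeToString().
fake_descriptor_set = b"\x0a\x03abc"

# Hypothetical channel description; key names are an assumption based on the
# ws-protocol advertise message.
channel = {
    "topic": "/example",
    "encoding": "protobuf",
    "schemaName": "example.MyMessage",
    "schema": schema_to_base64(fake_descriptor_set),
    "schemaEncoding": "protobuf",
}
```

The same helper would apply to other binary schema formats that are sent as strings over the WebSocket connection.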

NOTE: For Protobuf data, time values of type google.protobuf.Timestamp or google.protobuf.Duration will appear with sec and nsec fields (instead of seconds and nanos) in user scripts, message converters, and the rest of Foxglove, for consistency with time and duration types in other data formats.
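To make the renaming concrete, the sketch below shows the change in shape for a single time value. It only illustrates the field names described in the note and is not Foxglove's implementation; the function name `to_foxglove_time` is hypothetical.

```python
def to_foxglove_time(value: dict) -> dict:
    """Map a Protobuf-style {seconds, nanos} value to the {sec, nsec} shape."""
    return {"sec": value["seconds"], "nsec": value["nanos"]}


# A google.protobuf.Timestamp-like value, written as a plain dict.
stamp = to_foxglove_time({"seconds": 1700000000, "nanos": 500})
```

Code that reads these messages inside Foxglove should therefore access `sec` and `nsec` rather than `seconds` and `nanos`.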

FlatBuffers

For FlatBuffers data, use schema encoding "flatbuffer" and message encoding "flatbuffer".

Foxglove expects the schema data to be a binary-encoded FlatBuffers schema (.bfbs) file, compiled from the source FlatBuffers schema (.fbs) file. For Foxglove WebSocket connections, the schema must additionally be base64-encoded because it is represented as a string. Use the FlatBuffers schema compiler to generate the .bfbs files:

$ flatc --schema -b -o <PATH_TO_BFBS_OUTPUT_DIR> <PATH_TO_FBS_INPUT_DIR>

We provide .fbs files compatible with Foxglove in our foxglove/schemas repo.

Check out this example that uses Foxglove schemas to write FlatBuffers-encoded data to an MCAP file for more details.

ROS 1 and ROS 2

For ROS 1 data, use schema encoding "ros1msg" and message encoding "ros1".

For ROS 2 data, use schema encoding "ros2msg" or "ros2idl" and message encoding "cdr".

Foxglove expects the schema data to be a concatenation of the referenced .msg or .idl file and its dependencies. For more information about the concatenated format, see the MCAP specification appendix.
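As a rough sketch of that concatenation for .msg schemas: the code below assumes the delimiter convention we understand the appendix to describe, where the top-level definition comes first and each dependency follows a line of 80 `=` characters and a `MSG: <package>/<Type>` header. Verify the exact delimiter against the specification before relying on it. The function `concat_msg_definitions` and the sample definitions are hypothetical.

```python
# Assumed delimiter: a line of 80 "=" characters between definitions.
SEPARATOR = "=" * 80


def concat_msg_definitions(root_definition: str, dependencies: dict) -> str:
    """Join a top-level .msg definition with its dependencies.

    `dependencies` maps a type name such as "std_msgs/Header" to the text of
    that type's .msg file.
    """
    parts = [root_definition.strip()]
    for type_name, definition in dependencies.items():
        parts.append(SEPARATOR)
        parts.append(f"MSG: {type_name}")
        parts.append(definition.strip())
    return "\n".join(parts) + "\n"


schema_text = concat_msg_definitions(
    "std_msgs/Header header\nfloat64 value",
    {"std_msgs/Header": "uint32 seq\ntime stamp\nstring frame_id"},
)
```

The resulting `schema_text`, encoded as UTF-8, would be the schema data for a "ros1msg" schema.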

OMG IDL

For IDL schemas with CDR data, use schema encoding "omgidl" and message encoding "cdr".

For encoding OMG IDL schemas into MCAP, use the conventions listed in the MCAP Format Registry.

Known limitations are documented in the foxglove/omgidl library, which Foxglove uses internally.

If there's an IDL feature you want to see supported, create a community discussion or file an issue on the Foxglove OMG IDL GitHub repo.

All Foxglove-compatible .idl files are available in the foxglove/schemas repo.